The Transformer architecture revolutionized natural language processing when it was introduced in the landmark 2017 paper “Attention Is All You Need” by Vaswani et al. This blog post explores the complete architecture, breaking down each component to provide a thorough understanding of how Transformers work.

Introduction

Unlike recurrent neural networks (RNNs) and convolutional neural networks (CNNs), the Transformer relies entirely on attention mechanisms, with no recurrence or convolution. This design allows significantly more parallelization during training and has become the foundation for models like BERT, GPT, and T5.

Core Architecture

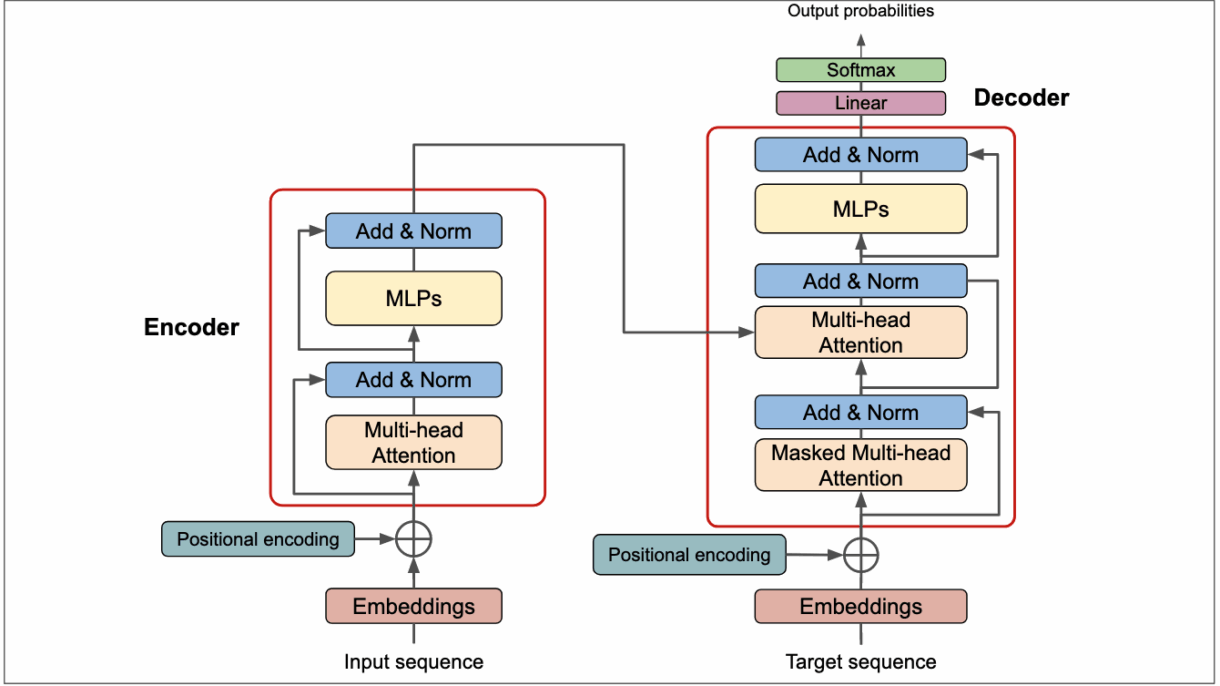

The Transformer follows an encoder-decoder architecture, but with a novel approach:

- Encoder: Processes the input sequence and builds representations

- Decoder: Generates output sequences using both the encoder’s representations and its own previous outputs

Both components are stacks of identical layers built from two kinds of sub-layer (each decoder layer additionally has a third sub-layer that attends over the encoder output):

- Multi-head attention mechanism

- Position-wise fully connected feed-forward network

Let’s break down each component in detail.

Encoder Architecture

The encoder consists of N identical layers (N=6 in the original paper). Each layer has two sub-layers:

1. Multi-Head Self-Attention

The first sub-layer is a multi-head self-attention mechanism. Self-attention allows the encoder to consider all positions in the input sequence when encoding a specific position, enabling the model to capture relationships regardless of their distance in the sequence.

The self-attention mechanism is calculated as follows:

- For each word in the input, three vectors are created by multiplying its embedding with learned weight matrices:

  - Query (Q): What the current word is looking for

  - Key (K): What the current word contains

  - Value (V): The actual content of the word

- Scores between positions are computed from the query and key vectors, normalized with a softmax, and used to weight the value vectors:

Attention(Q, K, V) = softmax(QK^T / √dk) × V

The paper calls this “scaled dot-product attention”. The factor 1/√dk (where dk is the dimension of the key vectors) keeps the dot products from growing large as dk increases, which would otherwise push the softmax into regions with extremely small gradients.
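The formula above can be sketched in a few lines of NumPy. This is a minimal illustration for a single unbatched sequence, not the authors' implementation; the function names, the optional boolean `mask` argument, and the random inputs are assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V, mask=None):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> output (n_q, d_v)."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)            # (n_q, n_k)
    if mask is not None:
        # Assumed convention: True = may attend, False = blocked.
        scores = np.where(mask, scores, -1e9)
    weights = softmax(scores, axis=-1)         # each row sums to 1
    return weights @ V, weights

# Toy inputs (random, for shape checking only).
rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 64))
K = rng.normal(size=(6, 64))
V = rng.normal(size=(6, 64))
out, weights = scaled_dot_product_attention(Q, K, V)
print(out.shape, weights.shape)
```

Each row of `weights` is a probability distribution over the key positions, and each output row is the corresponding weighted average of the value vectors.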

Multi-Head Attention

Rather than performing a single attention function, the Transformer uses multi-head attention:

MultiHead(Q, K, V) = Concat(head₁, head₂, ..., headₕ)W^O

where headᵢ = Attention(QW^Q_i, KW^K_i, VW^V_i)

The original paper uses h=8 parallel attention heads. Each head uses different learned linear projections for queries, keys, and values, allowing the model to jointly attend to information from different representation subspaces.
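As a rough sketch of the multi-head formula, the per-head projections W^Q_i, W^K_i, W^V_i can be stored as column blocks of three d_model × d_model matrices, so that all heads are computed with a few reshapes. That packing, the function name, and the randomly initialized weights are assumptions for illustration only.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(Q, K, V, W_q, W_k, W_v, W_o, h):
    """Q: (n_q, d_model); K, V: (n_k, d_model); every W: (d_model, d_model)."""
    n_q, d_model = Q.shape
    n_k = K.shape[0]
    d_k = d_model // h

    def split_heads(x, n):
        # (n, d_model) -> (h, n, d_k): head i gets columns i*d_k .. (i+1)*d_k
        return x.reshape(n, h, d_k).transpose(1, 0, 2)

    q = split_heads(Q @ W_q, n_q)
    k = split_heads(K @ W_k, n_k)
    v = split_heads(V @ W_v, n_k)

    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_k)    # (h, n_q, n_k)
    heads = softmax(scores) @ v                          # (h, n_q, d_k)
    concat = heads.transpose(1, 0, 2).reshape(n_q, d_model)
    return concat @ W_o                                  # (n_q, d_model)

d_model, h = 512, 8
rng = np.random.default_rng(0)
W_q, W_k, W_v, W_o = (
    rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(4)
)
x = rng.normal(size=(5, d_model))          # 5 tokens, self-attention: Q = K = V = x
out = multi_head_attention(x, x, x, W_q, W_k, W_v, W_o, h)
print(out.shape)
```

Slicing the packed matrices column-wise and running each head in an explicit loop gives the same result; the reshape version is simply more compact.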

2. Position-wise Feed-Forward Network

The second sub-layer is a simple feed-forward network applied to each position separately and identically:

FFN(x) = max(0, xW₁ + b₁)W₂ + b₂

This is a two-layer neural network with a ReLU activation in between. In the original implementation, the inner layer has a dimensionality of 2048, while input and output are of dimension 512.
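A minimal NumPy sketch of the feed-forward formula, using the dimensions quoted above. The random weight initialization is an assumption for the example; only the shapes and the ReLU come from the text.

```python
import numpy as np

def position_wise_ffn(x, W1, b1, W2, b2):
    """x: (n, d_model) -> (n, d_model); every row is transformed independently."""
    hidden = np.maximum(0.0, x @ W1 + b1)    # ReLU, shape (n, d_ff)
    return hidden @ W2 + b2

d_model, d_ff = 512, 2048
rng = np.random.default_rng(0)
W1 = rng.normal(scale=d_model ** -0.5, size=(d_model, d_ff))
b1 = np.zeros(d_ff)
W2 = rng.normal(scale=d_ff ** -0.5, size=(d_ff, d_model))
b2 = np.zeros(d_model)

x = rng.normal(size=(10, d_model))           # 10 positions
y = position_wise_ffn(x, W1, b1, W2, b2)
print(y.shape)
```

"Position-wise" means that feeding a single row through the function gives the same result as that row of the full output: positions do not interact in this sub-layer.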

Residual Connections and Layer Normalization

Around each sub-layer, the encoder employs a residual connection followed by layer normalization:

LayerNorm(x + Sublayer(x))

This helps with training deeper networks and maintains gradient flow.
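The expression LayerNorm(x + Sublayer(x)) can be sketched as follows. The learnable gain `gamma` and bias `beta`, the `eps` value, and the stand-in sub-layer are assumptions chosen for the example rather than details taken from the paper.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-6):
    # Normalize each position's feature vector to zero mean and unit variance.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def add_and_norm(x, sublayer, gamma, beta):
    # Residual connection followed by layer normalization.
    return layer_norm(x + sublayer(x), gamma, beta)

d_model = 512
rng = np.random.default_rng(0)
x = rng.normal(size=(10, d_model))
gamma, beta = np.ones(d_model), np.zeros(d_model)

# Hypothetical stand-in for attention or the feed-forward network.
y = add_and_norm(x, lambda t: 0.5 * t, gamma, beta)
print(y.mean(axis=-1)[:3], y.std(axis=-1)[:3])
```

Because the sub-layer's output is added to its input, the identity path is always available to gradients, which is what makes deep stacks easier to train.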

Decoder Architecture

The decoder also consists of N identical layers (N=6 in the original paper), but each decoder layer has three sub-layers:

1. Masked Multi-Head Self-Attention

The first sub-layer is similar to the encoder’s self-attention but includes a masking mechanism. Together with the output embeddings being offset by one position, this masking ensures that the prediction for position i can depend only on known outputs at positions before i, preserving the auto-regressive property even though the whole target sequence is processed in parallel during training.
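One common way to realize this masking is to set the scores of disallowed (future) positions to negative infinity before the softmax, so their weights become exactly zero. The sketch below assumes that approach; the helper names are hypothetical.

```python
import numpy as np

def causal_mask(n):
    # True where attention is allowed: position i may see positions j <= i.
    return np.tril(np.ones((n, n), dtype=bool))

def masked_self_attention_weights(Q, K):
    """Q, K: (n, d_k) -> attention weights (n, n) with no weight on future positions."""
    n, d_k = Q.shape
    scores = Q @ K.T / np.sqrt(d_k)
    scores = np.where(causal_mask(n), scores, -np.inf)
    # Softmax; the diagonal is always allowed, so every row has a finite max.
    scores = scores - scores.max(axis=-1, keepdims=True)
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
Q = rng.normal(size=(5, 64))
K = rng.normal(size=(5, 64))
w = masked_self_attention_weights(Q, K)
print(np.round(w, 2))
```

The printed matrix is lower triangular: the first position attends only to itself, the second to the first two positions, and so on.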

2. Multi-Head Attention Over Encoder Output

The second sub-layer performs multi-head attention where:

- Queries (Q) come from the previous decoder layer

- Keys (K) and Values (V) come from the encoder’s output

This allows the decoder to focus on appropriate parts of the input sequence.

3. Position-wise Feed-Forward Network

The third sub-layer is identical to the feed-forward network used in the encoder.

Like the encoder, residual connections and layer normalization are applied around each sub-layer.

Embeddings and Positional Encoding

Input and Output Embeddings

Both the encoder and decoder use learned embeddings to convert input tokens to vectors of dimension d_model (512 in the original paper). The same weight matrix is shared between the two embedding layers and the pre-softmax linear transformation, and in the embedding layers those weights are multiplied by √d_model.

Positional Encoding

Since the Transformer contains no recurrence or convolution, it needs a way to understand the order of the sequence. The solution is adding “positional encodings” to the input embeddings:

PE(pos, 2i) = sin(pos/10000^(2i/d_model))

PE(pos, 2i+1) = cos(pos/10000^(2i/d_model))

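Here pos is the position and i indexes a sine/cosine pair of dimensions, so each pair is a sinusoid with its own wavelength. This gives every position a distinct pattern. The authors chose it because, for any fixed offset k, PE(pos+k) is a linear function of PE(pos), which they hypothesized would make it easy for the model to attend by relative position.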
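The two formulas translate directly into NumPy. This sketch assumes an even d_model; the function name and the `max_len` parameter are illustrative.

```python
import numpy as np

def positional_encoding(max_len, d_model):
    """Return a (max_len, d_model) matrix of sinusoidal encodings (d_model even)."""
    pos = np.arange(max_len)[:, None]               # (max_len, 1)
    i = np.arange(d_model // 2)[None, :]            # (1, d_model / 2)
    angles = pos / np.power(10000.0, 2 * i / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)                    # even dimensions: PE(pos, 2i)
    pe[:, 1::2] = np.cos(angles)                    # odd dimensions:  PE(pos, 2i+1)
    return pe

pe = positional_encoding(max_len=50, d_model=512)
print(pe.shape)
```

The resulting matrix is added element-wise to the token embeddings, so row `pos` of `pe` is what tells the model where a token sits in the sequence.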

Self-Attention in Detail

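The self-attention mechanism is the core innovation of the Transformer. Each position’s output is a weighted sum of the value vectors of all positions, with weights given by a softmax over scaled query-key dot products, so any two positions interact directly in a single step.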
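One detail that is easy to check numerically is the 1/√dk scaling. Under the illustrative assumption that query and key components are independent with zero mean and unit variance, their dot product has variance dk, and dividing by √dk brings it back to roughly 1. The sample size and seed below are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)
d_k = 64
num_pairs = 10_000

# Assumed setup: query and key components drawn i.i.d. from N(0, 1).
q = rng.normal(size=(num_pairs, d_k))
k = rng.normal(size=(num_pairs, d_k))

raw = np.sum(q * k, axis=-1)       # unscaled dot products
scaled = raw / np.sqrt(d_k)        # scaled as in the attention formula

raw_var = raw.var()
scaled_var = scaled.var()
print(f"variance of q.k:            {raw_var:.1f}")
print(f"variance of q.k / sqrt(dk): {scaled_var:.2f}")
```

Without the scaling, typical scores would have a standard deviation of about √dk, and a softmax over such widely spread values tends to put nearly all its weight on one position.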


Multi-Head Attention in Detail

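Rather than performing a single attention function, the Transformer employs multi-head attention, which allows the model to jointly attend to information from different representation subspaces; with a single head, averaging inhibits this. Because each head works in a reduced dimension (dk = dv = d_model/h = 64 for h = 8), the total computational cost is similar to that of single-head attention with full dimensionality.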

Training and Optimization

The Transformer model was trained using the Adam optimizer with the following parameters:

- Learning rate varying according to a formula: lr = d_model^(-0.5) * min(step_num^(-0.5), step_num * warmup_steps^(-1.5))

- β₁ = 0.9, β₂ = 0.98, ε = 10^(-9)

- Warmup_steps = 4000
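The learning rate formula above is short enough to write out directly. In this sketch the function name is hypothetical, and clamping the step to at least 1 is an added safeguard against evaluating 0^(-0.5).

```python
def transformer_lr(step, d_model=512, warmup_steps=4000):
    """Learning rate at a given training step, following the schedule above."""
    step = max(step, 1)  # assumption: guard against step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

for s in (1, 1000, 4000, 8000, 100_000):
    print(s, transformer_lr(s))
```

The rate rises linearly for the first `warmup_steps` steps, peaks where the two arguments of `min` are equal (step = warmup_steps), and then decays proportionally to the inverse square root of the step number.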

Additional techniques used during training included:

- Residual dropout with rate 0.1

- Label smoothing with value ε_ls = 0.1
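Label smoothing replaces the one-hot training target with a slightly softened distribution. The sketch below uses one common formulation, mixing the one-hot vector with a uniform distribution over the vocabulary; treat the exact variant and the function name as assumptions.

```python
import numpy as np

def smooth_labels(targets, vocab_size, eps=0.1):
    """targets: integer array (n,) -> smoothed target distributions (n, vocab_size)."""
    one_hot = np.eye(vocab_size)[targets]
    # (1 - eps) on the true class, plus eps spread uniformly over all classes.
    return (1.0 - eps) * one_hot + eps / vocab_size

p = smooth_labels(np.array([2, 0]), vocab_size=5)
print(p)
```

Training against these softened targets discourages the model from becoming overconfident in any single token.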

Why Self-Attention?

The paper outlines several advantages of self-attention over recurrent and convolutional layers:

- Computational complexity per layer: A self-attention layer costs O(n²·d) versus O(n·d²) for a recurrent layer, so it is cheaper when the sequence length n is smaller than the representation dimensionality d.

- Amount of computation that can be parallelized: Self-attention requires a fixed number of sequential operations (O(1)), whereas recurrent layers require O(n) sequential operations.

- Path length between long-range dependencies: Self-attention creates direct connections between any two positions, resulting in maximum path length O(1), while recurrent layers require O(n) steps.

This table compares the computational characteristics, where n is the sequence length, d is the representation dimension, and k is the convolution kernel size:

| Layer Type | Complexity per Layer | Sequential Operations | Maximum Path Length |
|---|---|---|---|
| Self-Attention | O(n²·d) | O(1) | O(1) |
| Recurrent | O(n·d²) | O(n) | O(n) |
| Convolutional | O(k·n·d²) | O(1) | O(log_k(n)) |
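To get a feel for the complexity column, the leading-order terms can be evaluated for concrete sizes. This is only a back-of-the-envelope comparison with constant factors dropped; the function name and the example values of n, d, and k are assumptions.

```python
def per_layer_cost(n: int, d: int, k: int = 3) -> dict:
    """Leading-order operation counts per layer (constants dropped)."""
    return {
        "self_attention": n * n * d,
        "recurrent": n * d * d,
        "convolutional": k * n * d * d,
    }

short = per_layer_cost(n=64, d=512)      # sentence-length input
long = per_layer_cost(n=4096, d=512)     # long-document input
print("n=64:  ", short)
print("n=4096:", long)
```

For n < d self-attention is the cheapest of the three, while for n > d the n² term dominates, which is the quadratic cost that becomes a problem on very long sequences.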

Cross-Attention Mechanism

In the decoder, the second attention layer performs cross-attention, which is a crucial bridge between encoder and decoder:

- Queries (Q) come from the previous decoder layer

- Keys (K) and Values (V) come from the encoder’s output

This architecture enables the decoder to focus on appropriate parts of the input sequence, creating a context-aware generation process.

The mathematical formulation is the same as self-attention:

CrossAttention(Q, K, V) = softmax(QK^T / √dk) × V

The difference lies in the source of Q, K, and V vectors.
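The sketch below makes the difference in sources concrete for a single head: queries are projected from decoder states, while keys and values are projected from the encoder output. The projection matrices, sizes, and function names are hypothetical and randomly initialized for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(decoder_states, encoder_output, W_q, W_k, W_v):
    """decoder_states: (n_tgt, d_model), encoder_output: (n_src, d_model)."""
    Q = decoder_states @ W_q          # queries from the decoder
    K = encoder_output @ W_k          # keys from the encoder
    V = encoder_output @ W_v          # values from the encoder
    d_k = Q.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k))    # (n_tgt, n_src)
    return weights @ V, weights

d_model, d_k = 512, 64
rng = np.random.default_rng(0)
W_q, W_k, W_v = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_k)) for _ in range(3))
encoder_output = rng.normal(size=(7, d_model))   # 7 source tokens
decoder_states = rng.normal(size=(3, d_model))   # 3 target tokens so far
context, weights = cross_attention(decoder_states, encoder_output, W_q, W_k, W_v)
print(context.shape, weights.shape)
```

The weight matrix has one row per target position and one column per source position, so the source and target sequences can have different lengths.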

Implementation Details

The original Transformer model had the following hyperparameters:

- Encoder and decoder each had N=6 identical layers

- d_model = 512 (dimensionality of embeddings)

- d_ff = 2048 (dimensionality of feed-forward layers)

- h = 8 (number of attention heads)

- d_k = d_v = 64 (dimensionality of keys and values)

- Dropout rate of 0.1 was applied to the output of each sub-layer (before it is added to the sub-layer input and normalized) and to the sums of the embeddings and the positional encodings
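These hyperparameters fit naturally into a small configuration object. The class and method names below are hypothetical; the weight counts ignore biases and layer-normalization parameters and are meant only to show how the listed dimensions relate to one another.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TransformerConfig:
    num_layers: int = 6      # N
    d_model: int = 512
    d_ff: int = 2048
    num_heads: int = 8       # h
    dropout: float = 0.1

    @property
    def d_k(self) -> int:
        # Per-head key/value dimension: d_k = d_v = d_model / h.
        if self.d_model % self.num_heads != 0:
            raise ValueError("d_model must be divisible by num_heads")
        return self.d_model // self.num_heads

    def attention_weights(self) -> int:
        # W^Q, W^K, W^V and W^O, each d_model x d_model when heads are packed together.
        return 4 * self.d_model * self.d_model

    def ffn_weights(self) -> int:
        # W1 is d_model x d_ff and W2 is d_ff x d_model.
        return 2 * self.d_model * self.d_ff

base = TransformerConfig()
print(base.d_k, base.attention_weights(), base.ffn_weights())
```

With these values the feed-forward sub-layer holds twice as many weights as an attention sub-layer.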

Ablation Studies from the Paper

The authors conducted several experiments to validate design choices:

- Varying model size: Performance improved with both d_model and d_ff

- Attention heads: They found that multiple attention heads were better than a single head, although quality also dropped off with too many heads

- Sinusoidal vs. learned positional encoding: They replaced the sinusoidal positional encodings with learned positional embeddings and observed nearly identical results

Applications and Extensions

Since its introduction, the Transformer architecture has been the foundation for numerous breakthrough models:

- BERT (Bidirectional Encoder Representations from Transformers): Uses only the encoder portion for bidirectional context understanding

- GPT (Generative Pre-trained Transformer): Uses only the decoder portion for autoregressive text generation

- T5 (Text-to-Text Transfer Transformer): Frames all NLP tasks as text-to-text problems

- Vision Transformer (ViT): Adapts Transformers for image classification by treating image patches as sequence tokens

Limitations and Challenges

Despite its success, the Transformer has some limitations:

- Quadratic complexity: The self-attention mechanism scales quadratically with sequence length, making it computationally expensive for very long sequences

- Positional encoding limitations: Order is injected only through additive positional encodings rather than being built into the computation as in recurrent architectures, so position information may be captured less effectively

- Limited inductive bias: Without the sequential bias of RNNs or the spatial locality bias of CNNs, Transformers may require more data to learn patterns

Addressing the Complexity Issue

Several approaches have been proposed to address the quadratic complexity issue:

- Sparse Attention: Only attend to a subset of positions

- Linear Attention: Reformulate attention to achieve linear complexity

- Local Attention: Restrict attention to local neighborhoods

- Longformer/BigBird: Use a combination of local, global, and random attention patterns
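As a small illustration of the local-attention idea, a sliding-window mask lets each position attend only to neighbors within a fixed distance. This is a generic sketch, not the actual Longformer or BigBird implementation; the function name and window size are assumptions.

```python
import numpy as np

def local_attention_mask(n, window):
    """Boolean (n, n) mask: True where |i - j| <= window."""
    idx = np.arange(n)
    return np.abs(idx[:, None] - idx[None, :]) <= window

n = 1024
full_pairs = n * n
local_pairs = int(local_attention_mask(n, window=16).sum())
print(f"full attention pairs:  {full_pairs}")
print(f"local attention pairs: {local_pairs}")
```

The number of allowed query-key pairs is at most n·(2·window + 1), so it grows linearly with sequence length instead of quadratically. A dense mask like this one only illustrates the pattern; an efficient implementation would avoid materializing the full n × n matrix.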

Conclusion

The Transformer architecture represents a paradigm shift in sequence modeling. By replacing recurrence and convolutions with self-attention, it achieved state-of-the-art translation results while being more parallelizable and requiring significantly less time to train. Its success has led to a new generation of pre-trained models that have pushed the boundaries of what’s possible in natural language processing and beyond.

The fundamental principles of the Transformer—self-attention, multi-head attention, and position-wise feed-forward networks—have proven to be remarkably versatile and effective across domains, cementing its place as one of the most significant architectural innovations in deep learning.

References

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention is all you need. Advances in Neural Information Processing Systems, 30.

- Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

- Radford, A., Narasimhan, K., Salimans, T., & Sutskever, I. (2018). Improving language understanding by generative pre-training.

- Raffel, C., Shazeer, N., Roberts, A., Lee, K., Narang, S., Matena, M., Zhou, Y., Li, W., & Liu, P. J. (2020). Exploring the limits of transfer learning with a unified text-to-text transformer. Journal of Machine Learning Research, 21(140), 1-67.

- Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., Uszkoreit, J., & Houlsby, N. (2020). An image is worth 16×16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929.