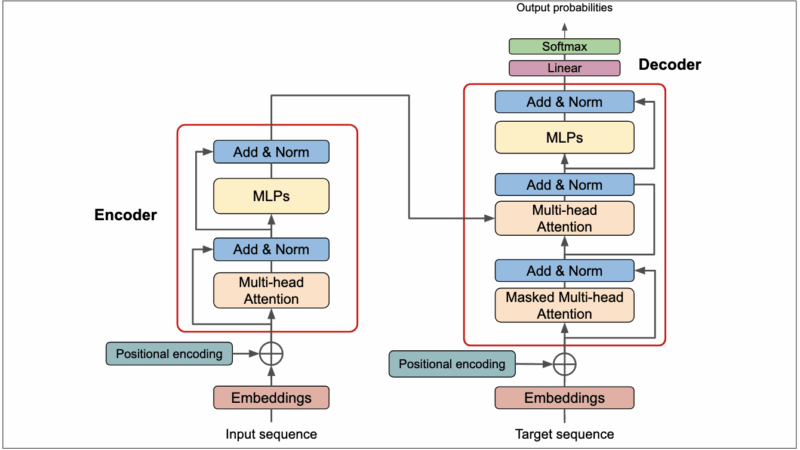

The Complete Transformer Architecture: A Deep Dive

The Transformer architecture revolutionized natural language processing when it was introduced in the landmark 2017 paper “Attention Is All You Need” by Vaswani et al. This blog post explores the complete architecture, breaking down each component to provide a thorough understanding of how Transformers work. [Figure: The Transformer Architecture, showing input embedding, output embedding, and positional encoding] […]

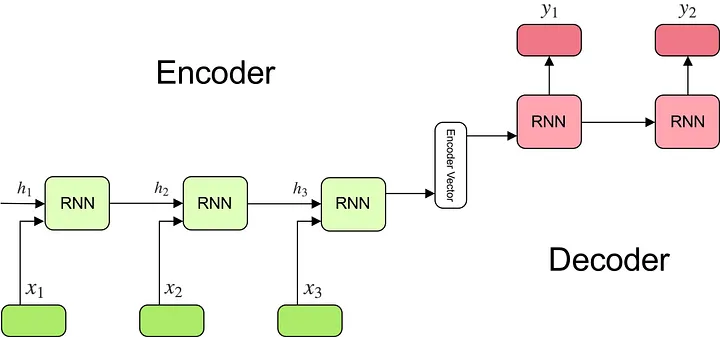

Deep Dive into Encoder-Decoder Architecture: Theory, Implementation and Applications

Introduction: The encoder-decoder architecture represents one of the most influential developments in deep learning, particularly for sequence-to-sequence tasks. This architecture has revolutionized machine translation, speech recognition, image captioning, and many other applications where input and output data have different structures or lengths. In this blog post, we’ll explore the topics below. Table of Contents: 1. Fundamentals […]

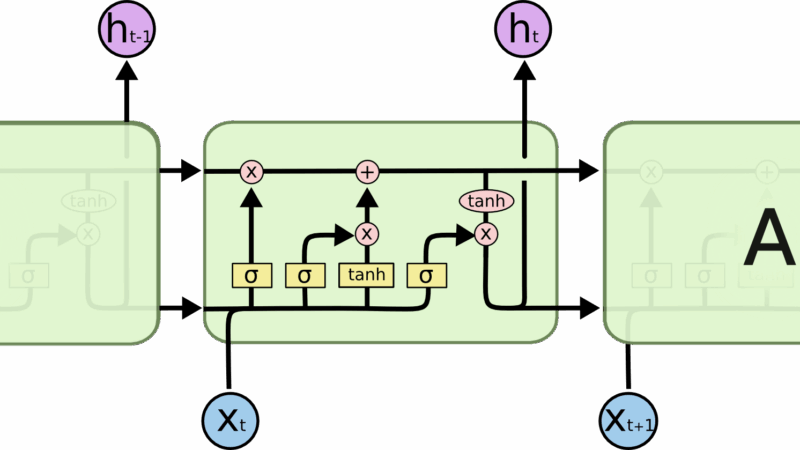

Understanding LSTM Networks with Forward and Backward Propagation Mathematical Intuitions

Developed by: tejask0512. Recurrent Neural Networks: Humans don’t begin their thought process from scratch every moment. As you read this essay, you interpret each word in the context of the ones that came before it. You don’t discard everything and restart your thinking each time; your thoughts carry forward. Conventional neural networks lack […]

Types of Recurrent Neural Networks: Architectures, Examples and Implementation

Recurrent Neural Networks (RNNs) are powerful sequence processing models that can handle data with temporal relationships. Unlike traditional feedforward neural networks, RNNs have connections that form directed cycles, allowing them to maintain memory of previous inputs. This makes them particularly effective for tasks involving sequential data like text, speech, time series, and more. In this […]
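The “directed cycle” that gives an RNN its memory can be sketched in a few lines. The following is a minimal, dependency-free sketch of a vanilla (Elman-style) RNN forward pass. It assumes a tanh activation, a zero initial hidden state, and hand-picked toy weights. The names `matvec` and `rnn_forward` are hypothetical illustrations and do not come from the post.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_forward(xs: List[Vector], w_xh: Matrix, w_hh: Matrix, b_h: Vector) -> List[Vector]:
    """Vanilla RNN: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), with h_0 = 0.

    The W_hh h_{t-1} term is the recurrent (cyclic) connection: it feeds the
    previous hidden state back in, so earlier inputs influence later states.
    Returns the hidden state after every time step.
    """
    h = [0.0] * len(b_h)
    states = []
    for x in xs:
        pre = [a + r + b for a, r, b in zip(matvec(w_xh, x), matvec(w_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


if __name__ == "__main__":
    # Toy, hypothetical weights: input size 1, hidden size 2.
    w_xh = [[1.0], [0.5]]
    w_hh = [[0.5, 0.0], [0.0, 0.5]]
    b_h = [0.0, 0.0]
    # A single "impulse" at the first step, then zeros.
    xs = [[1.0], [0.0], [0.0]]

    with_memory = rnn_forward(xs, w_xh, w_hh, b_h)
    no_memory = rnn_forward(xs, w_xh, [[0.0, 0.0], [0.0, 0.0]], b_h)
    print(with_memory[2])  # still non-zero: the impulse is remembered (decaying)
    print(no_memory[2])    # [0.0, 0.0]: without W_hh, nothing carries over
```

After the impulse, the hidden state with memory decays toward zero but does not vanish immediately. When `W_hh` is all zeros, the model reduces to a feedforward map applied independently at each step.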

Deep Learning For NLP Prerequisites

Understanding RNN Architectures for NLP: From Simple to Complex. Natural Language Processing (NLP) has evolved dramatically with the development of increasingly sophisticated neural network architectures. In this blog post, we’ll explore various recurrent neural network (RNN) architectures that have revolutionized NLP tasks, from basic RNNs to complex encoder-decoder models. Simple RNN: The Foundation. What is […]

Comprehensive Guide to NLP Text Representation Techniques

Natural Language Processing (NLP) requires converting human language into numerical formats that computers can understand. This guide explores major text representation techniques in depth, comparing their strengths, weaknesses, and practical applications. 1. One-Hot Encoding: One-hot encoding is a fundamental representation technique that forms the conceptual foundation for many text representation methods. How It Works: One-hot […]
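The excerpt stops before the mechanics. One-hot encoding maps each vocabulary word to a vector with a single 1 at that word’s index and 0s everywhere else. The sketch below is minimal and rests on several assumptions: whitespace tokenization, indices assigned in order of first appearance (many libraries sort the vocabulary instead), and the hypothetical helper names `build_vocab` and `one_hot`.

```python
from typing import Dict, List


def build_vocab(tokens: List[str]) -> Dict[str, int]:
    """Assign each distinct token an index in order of first appearance."""
    vocab: Dict[str, int] = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def one_hot(token: str, vocab: Dict[str, int]) -> List[int]:
    """Return a |V|-length vector: 1 at the token's index, 0 elsewhere.

    Raises KeyError for out-of-vocabulary tokens.
    """
    vec = [0] * len(vocab)
    vec[vocab[token]] = 1
    return vec


if __name__ == "__main__":
    tokens = "the cat sat on the mat".split()
    vocab = build_vocab(tokens)
    print(vocab)  # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
    for tok in tokens:
        print(tok, one_hot(tok, vocab))
```

The vector length equals the vocabulary size, and every pair of distinct words is equally far apart. Both properties are the usual motivation for the denser representations that follow one-hot encoding.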

Text Mining with Regex

🔍 Text Mining & Regex in Feature Engineering: Unlocking Value from Unstructured Text Data. 🧠 What is Text Mining? Text Mining (also called Text Analytics) is the process of deriving useful insights, patterns, and structure from unstructured textual data. Since an estimated 80% or more of real-world data is unstructured (emails, chats, reviews, social media, documents), text mining […]
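Regex-based feature engineering usually works in two steps: pull structured fragments out of free text, then turn them into numeric features. The sketch below shows that idea with Python’s standard `re` module. The pattern set, the helper names `PATTERNS`, `extract_matches`, and `regex_features`, and the sample review are all hypothetical. The patterns are deliberately simplified rather than production-grade.

```python
import re
from typing import Dict, List

# Simplified, illustrative patterns (assumptions, not production-grade):
# real email and URL matching needs much more careful expressions.
PATTERNS = {
    "emails": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "urls": re.compile(r"https?://\S+"),
    "hashtags": re.compile(r"#\w+"),
    "prices": re.compile(r"\$\d+(?:\.\d{2})?"),
}


def extract_matches(text: str) -> Dict[str, List[str]]:
    """Return all non-overlapping matches for each named pattern."""
    return {name: pattern.findall(text) for name, pattern in PATTERNS.items()}


def regex_features(text: str) -> Dict[str, int]:
    """Turn the matches into numeric count features, e.g. for a model."""
    return {f"num_{name}": len(found) for name, found in extract_matches(text).items()}


if __name__ == "__main__":
    review = ("Contact support@example.com or visit https://example.com/help "
              "for the deal, now $19.99 #deal #sale")
    print(extract_matches(review))
    print(regex_features(review))
```

Only non-capturing groups `(?:...)` are used, so `findall` returns whole matches rather than group contents. Note that the simple URL pattern would also capture trailing punctuation, such as a sentence-ending period.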